LLM API Test is an MIT-licensed open-source web tool designed to benchmark and compare the performance of large language model APIs such as GPT-4 and Gemini. It provides real-time metrics like first-token latency, tokens per second (TPS), success rates, and output quality, helping developers and researchers evaluate LLMs for production use, optimization, or academic research.

Article source: resohive.com (https://resohive.com/llm-api-test-open-source-benchmark-tool-for-gpt-4-gemini-more.html)


🔍 What Makes LLM API Test Unique?

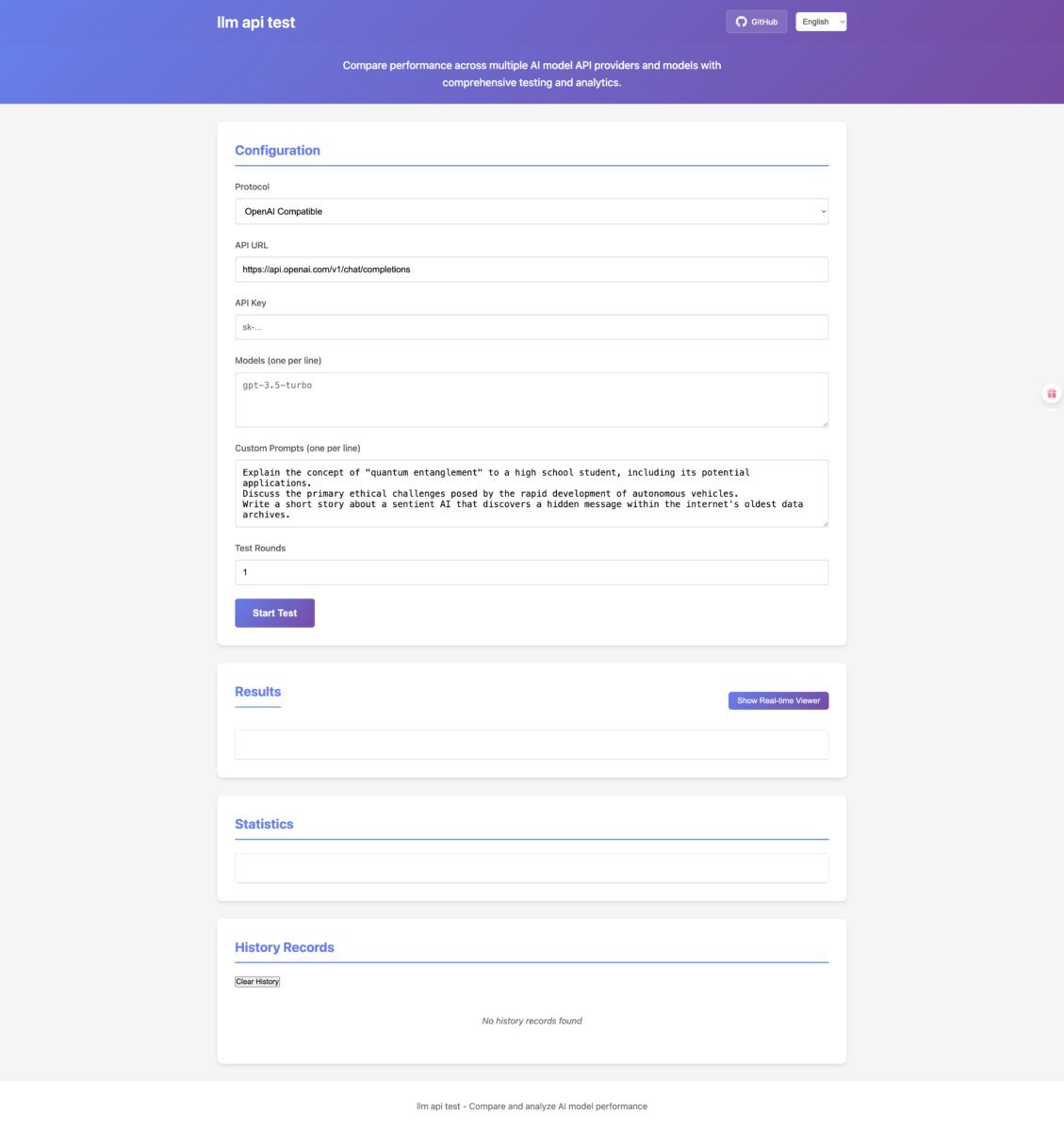

- Protocol Support: Built-in support for OpenAI (GPT-3.5, GPT-4, Turbo) and Google Gemini (Pro, Pro Vision); extendable to any OpenAI-compatible custom endpoint.

- Key Metrics:

  - First-token latency: Time from sending a request until the first token arrives.

  - Output speed: Measured in tokens per second (token/s).

  - Success rate: Percentage of API calls that complete successfully.

  - Output quality: Optional side-by-side comparison of model responses.
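The first three metrics can all be derived from per-request timestamps. The sketch below is a minimal illustration, not LLM API Test's own code: it assumes each request is stored as a hypothetical `RequestRecord` (start time, first-token arrival time, end time, output token count, success flag) and measures output speed over the window after the first token arrives, which is one common convention; other tools divide by the full request duration instead.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RequestRecord:
    """Hypothetical per-request timing record (seconds, monotonic clock)."""
    start: float
    first_token: Optional[float]  # None if no token was ever received
    end: float
    output_tokens: int
    ok: bool


def first_token_latency(r: RequestRecord) -> Optional[float]:
    """Seconds from sending the request to receiving the first token."""
    if r.first_token is None:
        return None
    return r.first_token - r.start


def tokens_per_second(r: RequestRecord) -> Optional[float]:
    """Output speed over the generation window (first token to last token).

    Assumption: the wait for the first token is excluded from this metric.
    """
    if r.first_token is None or r.end <= r.first_token:
        return None
    return r.output_tokens / (r.end - r.first_token)


def success_rate(records: list[RequestRecord]) -> float:
    """Percentage of requests that completed successfully."""
    if not records:
        return 0.0
    return 100.0 * sum(r.ok for r in records) / len(records)


def summarize(records: list[RequestRecord]) -> dict:
    """Average latency and speed over successful requests; rate over all."""
    ok = [r for r in records if r.ok]
    ttfts = [v for v in map(first_token_latency, ok) if v is not None]
    speeds = [v for v in map(tokens_per_second, ok) if v is not None]
    return {
        "avg_first_token_latency_s": sum(ttfts) / len(ttfts) if ttfts else None,
        "avg_tokens_per_second": sum(speeds) / len(speeds) if speeds else None,
        "success_rate_pct": success_rate(records),
    }
```

For example, two successful requests (first token after 0.5 s and 0.3 s, then 100 tokens in 2 s and 60 tokens in 1 s) plus one failed request summarize to an average first-token latency of 0.4 s, an average speed of 55 token/s, and a success rate of about 66.7%. Failed requests count against the success rate but are kept out of the latency and speed averages so they do not distort them.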

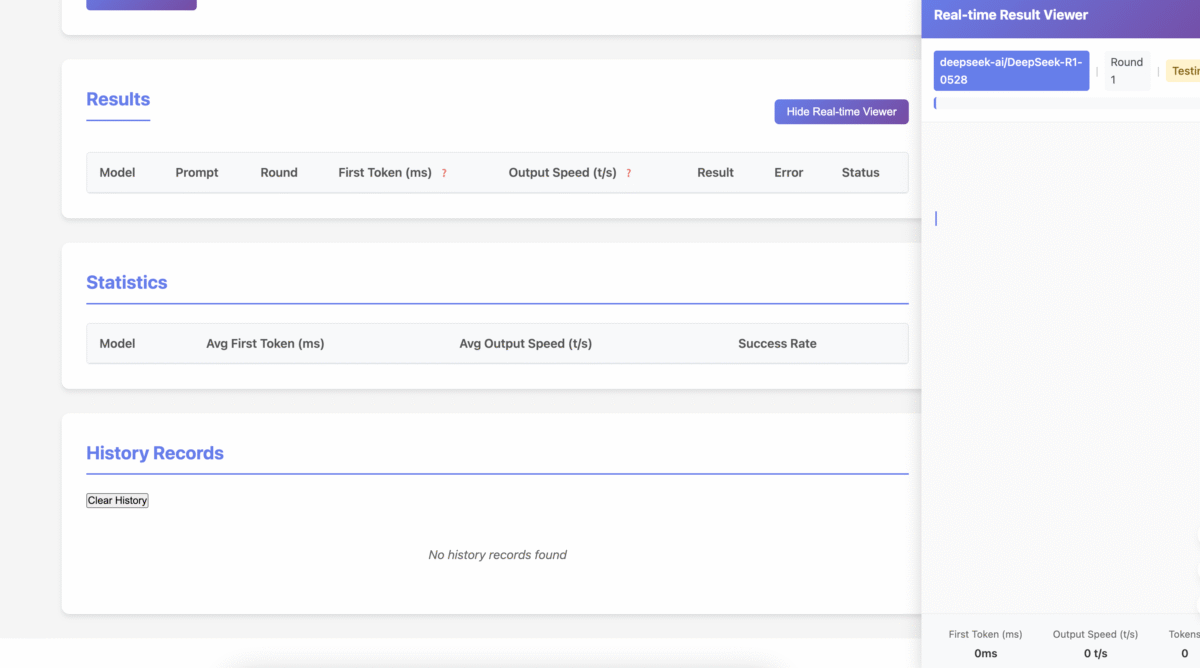

- User Experience: Responsive interface compatible with desktop and mobile. Real-time visual charts update as tests run. Historical logs are stored for trend tracking.
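The protocol support matters because OpenAI-style and Gemini endpoints expect differently shaped requests. The sketch below only builds (and does not send) a single-prompt streaming request. It follows the publicly documented OpenAI Chat Completions format (a `/chat/completions` path with a Bearer token, which OpenAI-compatible servers also accept) and the Gemini API's `streamGenerateContent` method with `alt=sse`. The helper name `build_stream_request` and the example base URLs are illustrative assumptions, not LLM API Test's internals, and API versions in the URLs may change over time.

```python
import json


def build_stream_request(protocol: str, base_url: str, api_key: str,
                         model: str, prompt: str) -> tuple[str, dict, bytes]:
    """Return (url, headers, body) for a single-prompt streaming request.

    Hypothetical helper: it only assembles the request. Sending it and
    parsing the server-sent events are left to an HTTP client of your choice.
    """
    base = base_url.rstrip("/")
    if protocol == "openai":
        # OpenAI Chat Completions format, also used by OpenAI-compatible servers.
        # Assumes base_url already includes the version, e.g. ".../v1".
        url = f"{base}/chat/completions"
        headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }
        payload = {
            "model": model,
            "messages": [{"role": "user", "content": prompt}],
            "stream": True,
        }
    elif protocol == "gemini":
        # Gemini API: the model name is part of the path, and alt=sse asks
        # for server-sent events. Assumes base_url is e.g. ".../v1beta".
        url = f"{base}/models/{model}:streamGenerateContent?alt=sse"
        headers = {
            "x-goog-api-key": api_key,
            "Content-Type": "application/json",
        }
        payload = {"contents": [{"role": "user", "parts": [{"text": prompt}]}]}
    else:
        raise ValueError(f"unsupported protocol: {protocol!r}")
    return url, headers, json.dumps(payload).encode("utf-8")
```

Usage: `build_stream_request("openai", "https://api.openai.com/v1", key, "gpt-4", "Hello")` targets `https://api.openai.com/v1/chat/completions`, while `build_stream_request("gemini", "https://generativelanguage.googleapis.com/v1beta", key, "gemini-pro", "Hello")` targets the model-specific `streamGenerateContent` URL. Pointing the `openai` branch at a different base URL is, in spirit, what "extendable to any OpenAI-compatible custom endpoint" means.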


🚀 Why Benchmark LLM APIs?

As ultra-low-latency models like GPT-4.1 nano emerge, performance becomes a competitive advantage. Projects like lmspeed.net reveal global API response time differences, encouraging developers to evaluate:

- Vendor infrastructure performance

- Latency across regions

- Trade-offs between quality and cost

Leading organizations such as NVIDIA and MLCommons have launched initiatives like GenAI-Perf and MLPerf Client 1.0 to standardize evaluation tools. LLM API Test complements these efforts with a practical, easy-to-use frontend.

📌 Typical Use Cases

- Vendor Selection: Compare models like GPT-4 Turbo vs Gemini Pro in terms of speed, quality, and pricing to guide procurement.

- Cost-Performance Optimization: Follow guides like TechRadar’s 2025 GenAI report to balance speed, quality, and API cost with real data.

- Academic Research: Validate performance claims made in LLM studies, or benchmark tool-use setups such as LangChain and Toolformer.
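For the vendor-selection and cost-performance cases, benchmark output can be combined with per-token pricing. The sketch below is purely illustrative and not a feature of LLM API Test: the `ModelResult` fields, the model names, and especially the prices are placeholders to be replaced with your own measurements and each vendor's current price sheet. It filters out models that miss a speed or reliability target, then sorts the rest by estimated cost per request.

```python
from dataclasses import dataclass


@dataclass
class ModelResult:
    """Hypothetical benchmark summary for one model."""
    name: str
    avg_tokens_per_second: float
    success_rate_pct: float
    input_price_per_1m: float   # placeholder: USD per 1M input tokens
    output_price_per_1m: float  # placeholder: USD per 1M output tokens


def cost_per_request(m: ModelResult, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request with the given token counts."""
    return (input_tokens * m.input_price_per_1m
            + output_tokens * m.output_price_per_1m) / 1_000_000


def cheapest_meeting_targets(results: list[ModelResult], input_tokens: int,
                             output_tokens: int, min_tps: float,
                             min_success_pct: float = 95.0) -> list[ModelResult]:
    """Keep models that meet the speed and reliability targets, cheapest first."""
    eligible = [
        m for m in results
        if m.avg_tokens_per_second >= min_tps and m.success_rate_pct >= min_success_pct
    ]
    return sorted(eligible, key=lambda m: cost_per_request(m, input_tokens, output_tokens))
```

Quality is deliberately left out of the ranking: it is usually judged separately (for instance with the tool's side-by-side output comparison), and folding it into a single score would hide the trade-off the comparison is meant to expose.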

⚙️ Deployment & Setup

- Local Setup:

  ```bash
  git clone https://github.com/qjr87/llm-api-test
  cd llm-api-test
  npm install
  npm start
  ```

  Visit http://localhost:8000 in your browser.

- Static Hosting: Deploy on Vercel, Netlify, or GitHub Pages. A simple `Dockerfile` is included for containerized deployment.

🧪 How to Run Your First Test

- Go to the Configuration panel.

- Select the LLM protocol (OpenAI or Gemini) and paste your API URL & key.

- Specify models to test (e.g., `gpt-4`, `gemini-pro`).

- Set test rounds, concurrency, and prompt content.

- Click Start Test and watch real-time metrics unfold!
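Conceptually, the rounds and concurrency settings describe a simple loop: send the same prompt `rounds` times, keep at most `concurrency` requests in flight, and time each streamed response. The sketch below shows that loop with Python's `concurrent.futures`. It is an assumption about how such a harness can work, not LLM API Test's actual implementation: `stream_tokens` is a hypothetical callable standing in for a real streaming client that yields tokens as they arrive, and an offline fake stream is used so the example runs without an API key.

```python
import time
from collections.abc import Callable, Iterable
from concurrent.futures import ThreadPoolExecutor


def timed_request(stream_tokens: Callable[[str], Iterable[str]], prompt: str) -> dict:
    """Run one streaming request and record first-token latency and speed."""
    start = time.perf_counter()
    first = None
    count = 0
    try:
        for _ in stream_tokens(prompt):
            if first is None:
                first = time.perf_counter()  # first token has arrived
            count += 1
    except Exception as exc:  # network or HTTP errors count as failures
        return {"ok": False, "error": str(exc)}
    end = time.perf_counter()
    if first is None:
        return {"ok": False, "error": "empty response"}
    gen_time = end - first
    return {
        "ok": True,
        "first_token_latency_s": first - start,
        "tokens": count,
        "tokens_per_second": count / gen_time if gen_time > 0 else None,
    }


def run_test(stream_tokens: Callable[[str], Iterable[str]], prompt: str,
             rounds: int, concurrency: int) -> list[dict]:
    """Send `prompt` `rounds` times with at most `concurrency` requests in flight."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        futures = [pool.submit(timed_request, stream_tokens, prompt)
                   for _ in range(rounds)]
        return [f.result() for f in futures]


if __name__ == "__main__":
    def fake_stream(prompt: str):
        """Offline stand-in: about 55 ms to the first token, then ~5 ms per token."""
        time.sleep(0.05)
        for i in range(20):
            time.sleep(0.005)
            yield f"tok{i}"

    results = run_test(fake_stream, "Hello", rounds=6, concurrency=3)
    ok = [r for r in results if r["ok"]]
    print(f"success rate: {100 * len(ok) / len(results):.0f}%")
```

Threads suit this workload because each request spends almost all its time waiting on the network; the per-request dictionaries can then be aggregated into the averages and success rate that the dashboard charts.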

🔗 Official Links

- Live Demo: https://llmapitest.com

- GitHub Repository: https://github.com/qjr87/llm-api-test

✅ Conclusion

LLM API Test is the go-to tool for developers, researchers, and businesses needing insight into large language model API performance. Whether you're fine-tuning your app's latency or preparing a paper on AI inference efficiency, this open-source project gives you the measurements you need in a visual, reliable, and transparent way.
